Enhancing Data Insights with Snowflake and LLM Integration

Revolutionizing SQL Query Generation with Snowflake and LLMs

Leveraging LLMs for Enhanced Data Analysis in Snowflake

The integration of Large Language Models (LLMs) like GPT and Snowflake’s Query Engine is transforming the landscape of data analytics and SQL query generation. This blog is a detailed exploration that delves into the current advancements, including leveraging open-source LLMs like CodeLlama and RAG for building efficient SQL co-pilots and the use of techniques such as schema feeding and few-shot learning for complex queries.

Understanding the Power of LLMs in Snowflake Integration

Exploring the Benefits of Enhanced SQL Query Generation

Optimizing Data Insights with Snowflake and LLMs

Integrating LLMs into Snowflake for Advanced Analytics

Snowflake and LLM Integration

A paradigm shift in data querying Snowflake’s robust platform, integrating LLMs like CodeLlama-13B and RAG, offers unparalleled capabilities in generating SQL queries from natural language inputs. This synergy enables a seamless translation of user queries into precise SQL commands, bolstered by the LLM’s understanding of complex queries powered by RAG and few-shot learning.

In-Context Learning Techniques: Schema Feeding & Few-Shot Learning

Innovative techniques like schema feeding and few-shot learning have been pivotal in enhancing the SQL co-pilot’s capabilities. These methods enable the model to grasp the structure and intricacies of complex databases, allowing for the generation of more accurate and relevant SQL queries based on natural language inputs.

Retrieval-Augmented Generation (RAG) in SQL Query Generation

RAG is an essential component in enhancing LLM outputs for SQL query generation. By integrating external knowledge bases, RAG allows LLMs to generate more accurate and contextually relevant responses. This approach is especially beneficial in cases where the static training data of LLMs may not be sufficient to address specific or complex queries, Examples fed through RAG help the llm perform better in these scenarios.

Introduction to Snowflake and LLM Integration for Data Analysis

Exploring the Efficiency of SQL Query Generation with LLMs

Use Cases for Snowflake and LLMs in Data Insights

Best Practices for Leveraging LLMs for Enhanced Data Analysis

Building an Efficient SQL Co-pilot with Open-Source LLMs

The development of the Snowflake SQL co-pilot, a state-of-the-art text-to-SQL LLM, exemplifies the potential of integrating LLMs with Snowflake. With challenges such as feeding realistic datasets, reflecting complex real-world database schemas, and tailoring models to Snowflake SQL’s unique capabilities having been addressed, a more robust and efficient SQL co-pilot, capable of handling complex, real-world SQL queries with higher accuracy has emerged.

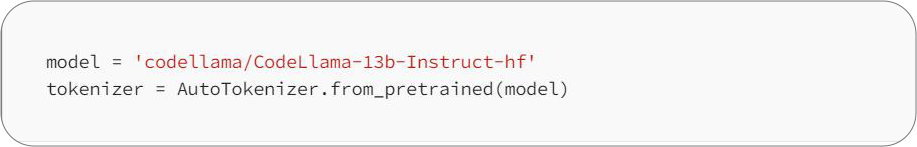

Model Selection and Tokenization:

- We begin with the selection of the model, ‘codellama/CodeLlama-13b-Instruct-hf’, renowned for its instructive capabilities in generating SQL queries.

- The AutoTokenizer from the transformers library is used for efficient tokenization, ensuring that the inputs are correctly formatted for the LLM.

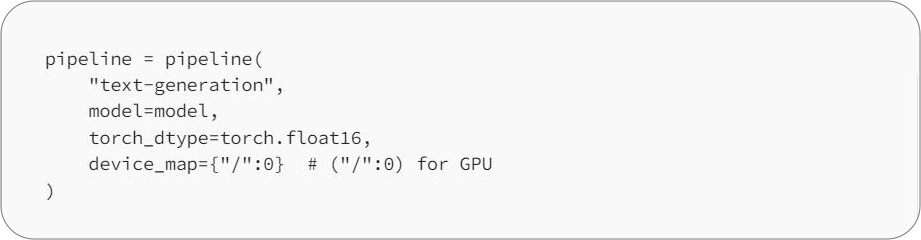

Setting Up the Pipeline:

- A pipeline for text generation is established with the selected model.

- The configuration includes specifying the device for computation (GPU or CPU) to optimize performance.

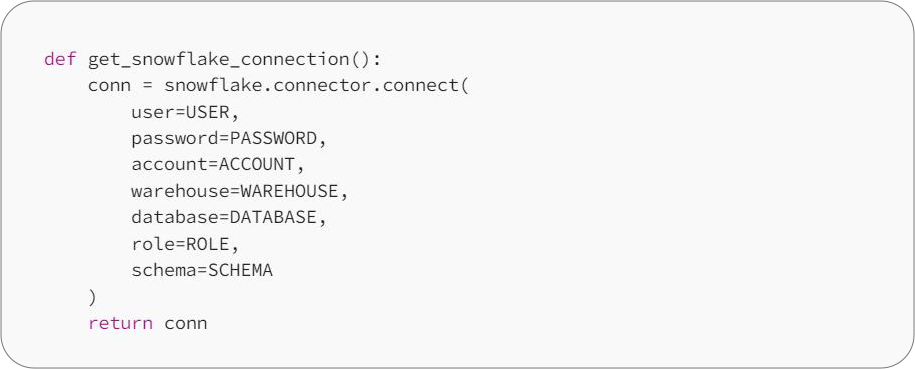

Snowflake Connection:

- Establish a connection to the Snowflake database, crucial for executing the generated SQL queries and retrieving table details.

- Credentials and other connection parameters are securely configured.

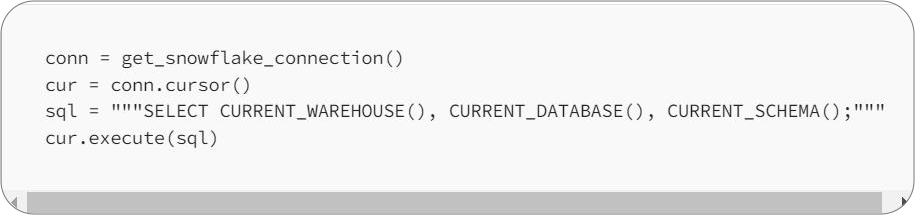

Executing Queries in Snowflake:

- Now we use the established connection to execute queries and interact with the Snowflake database.

- This step includes fetching current warehouse, database, and schema details.

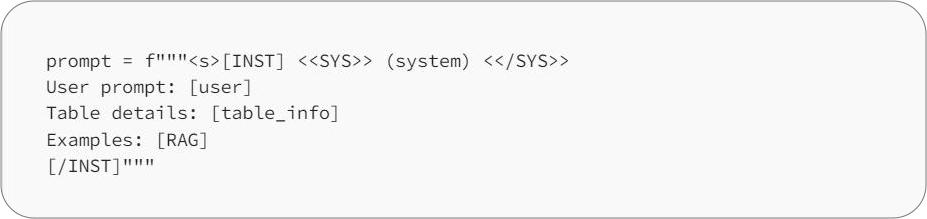

Query Generation with LLMs:

- Build a prompt that instructs the LLM to generate a SQL query based on user input and table details.

- This involves using the LLM’s understanding of the database schema and user requirements.

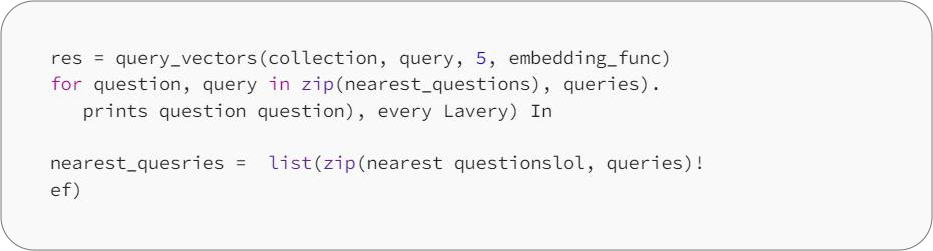

RAG:

Use RAG to search and fetch similar SQL queries to the user’s query from vector db collection to feed to the model as in context learning.

Query Execution and Response Retrieval:

- Once the SQL query is generated by the LLM, it is executed in Snowflake.

- The responses are then retrieved and presented in a user-friendly format.

Key Considerations

- Accuracy and Relevance: The integration focuses on ensuring that the generated queries accurately reflect the user’s intent and are coherent with the database schema.

- Real-world Application: The system is designed to handle complex, real-world SQL queries, moving beyond simplistic textbook examples to address practical business needs.

- Continuous Improvement: The SQL co-pilot needs to undergo regular updates through data or finetuning and refinements to enhance its capabilities and adapt to evolving database technologies.

Evaluating SQL Queries Generated by LLMs

The evaluation of SQL queries generated by LLMs is critical. This involves assessing the reliability and quality of the generated SQL query, where methods like black-box and white-box approaches, along with verbalized confidence assessments, play a crucial role. These evaluations ensure that the generated queries are not only syntactically correct but also semantically aligned with the user’s intent.

Black-Box Methods: Black-box methods are essential for evaluating LLMs when there’s limited access to their internal states. They can be broadly classified into three categories:

- Consistency Methods: These methods, inspired by ensemble learning, involve generating multiple responses for a given query and analyzing the consistency across these responses. They can better capture uncertainty and confidence compared to verbal approaches, though they can be computationally intensive.

- Verbal Approaches: These methods leverage the LLMs’ inherent capabilities to generate a confidence score for the SQL query. The model is prompted to assess its confidence level in the query it has generated. However, LLMs may show a tendency towards overconfidence, making these approaches less reliable.

- Hybrid Methods: Hybrid approaches combine the strengths of both consistency and verbal methods. They provide a more nuanced estimation of the model’s confidence, although they can be complex and resource intensive.

UI Flow: Streamlit and Snowflake:

The integration of Streamlit with Snowflake provides an interactive environment for developing and deploying LLM-powered applications. This allows for quick prototyping and implementation of applications like the SQL co-pilot, where LLMs interpret user questions in natural language and generate corresponding SQL queries.

Conclusion

The integration of Snowflake’s Query Engine with LLMs like Llama 2, GPT with RAG represents a significant advancement in the field of data analytics. This combination offers a powerful tool for transforming natural language queries into precise SQL commands, enabling businesses to unlock new insights and enhance their data processing capabilities.

Media Coverage

Media Coverage Press Release

Press Release