Langchain vs Llama Index

Unveiling the Showdown: Langchain vs Llama Index

As large language models (LLMs) continue to expand what AI can do, developers need robust frameworks for building LLM-powered applications. Two of the most popular options are Langchain and Llama Index. Both are capable, but they take different approaches. In this in-depth comparison, we contrast the key features and use cases of each framework so you can determine which best fits your needs.

Whether you aim to build a flexible conversational agent or an efficient search index, understanding the trade-off between Langchain's modular architecture and Llama Index's specialized retrieval will help you pick the right tool for the job.

Before getting into the differences, here is a brief overview of both Langchain and Llama Index.

Langchain

Langchain is a robust framework that simplifies the development and deployment of LLM-powered applications.

Its modular, extensible architecture lets developers combine LLMs with various data sources and services. This flexibility makes it possible to build a wide range of applications that take advantage of what LLMs can do.

It is a more general-purpose framework than Llama Index, designed to help developers get the most out of LLMs across many kinds of applications.
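To make "modular" concrete, here is a minimal Python sketch that assumes nothing about Langchain's real classes: the `LLM` and `DataSource` interfaces, `EchoLLM`, `InMemorySource`, and `answer` are all hypothetical names. Any model or data source that satisfies the small interfaces can be swapped in without touching the code that combines them.

```python
from typing import Protocol


class LLM(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    def generate(self, prompt: str) -> str: ...


class DataSource(Protocol):
    """Hypothetical interface: anything that can supply context documents."""

    def load(self) -> list[str]: ...


class EchoLLM:
    """Stand-in model: returns the last line of the prompt, uppercased."""

    def generate(self, prompt: str) -> str:
        return prompt.splitlines()[-1].upper()


class InMemorySource:
    """Stand-in data source backed by a plain Python list."""

    def __init__(self, docs: list[str]) -> None:
        self.docs = docs

    def load(self) -> list[str]:
        return list(self.docs)


def answer(question: str, llm: LLM, source: DataSource) -> str:
    """Combine any model with any data source through the shared interfaces."""
    context = "\n".join(source.load())
    prompt = f"Context:\n{context}\nQuestion:\n{question}"
    return llm.generate(prompt)
```

For example, `answer("what is rag?", EchoLLM(), InMemorySource(["doc a"]))` returns `"WHAT IS RAG?"`, because the stub model simply echoes the last prompt line. Replacing `EchoLLM` with a client for a real model would not require changing `answer`.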

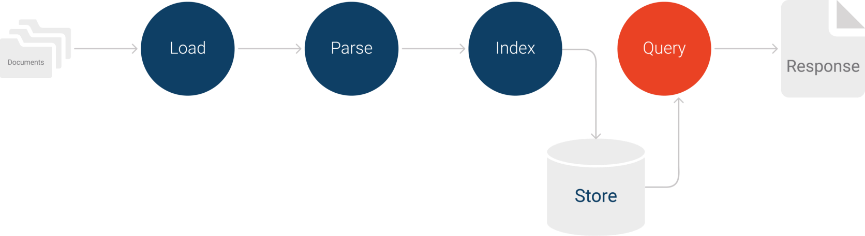

Llama Index

Llama Index is designed specifically for building search and retrieval applications. It provides a simple interface for querying LLMs and retrieving relevant documents. If you are building a search and retrieval application that needs to be efficient and simple, Llama Index is the better choice.

Llama Index acts as a bridge between your data and powerful language models, streamlining tasks such as loading, indexing, and querying data through user-friendly features. This diverse feature set makes it a powerful tool for building LLM applications.

If you are building a versatile LLM application that needs adaptability, integration with a variety of software, and custom functionality, Langchain is an excellent choice: its design gives it a high degree of flexibility across a wide range of applications. If, on the other hand, your project is a streamlined search and retrieval application that prioritizes efficiency and simplicity and concentrates on a specific task, Llama Index is the preferred option. The two differ in approach and purpose, so the right pick depends on your specific needs.

Node Parsers and Sentence Splitters

Both Llama Index node parsers and Langchain sentence splitters break text into chunks, but they differ in scope and functionality:

Llama Index Node Parsers: Convert documents into individual "nodes" for indexing and search, and establish relationships between those nodes to preserve the context of the information.

Langchain Sentence Splitters: Divide text into individual sentences, primarily for language processing tasks such as translation, summarization, and sentiment analysis.
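The difference in scope can be illustrated with a short, library-free Python sketch. It is not the Llama Index or Langchain API: `Node`, `parse_nodes`, and `split_sentences` are hypothetical names, the node parser uses fixed-size character chunks (real parsers are smarter about boundaries), and the sentence splitter is a naive regular expression.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Hypothetical node: a chunk of a document plus links to its neighbours."""
    node_id: str
    text: str
    doc_id: str
    prev_id: Optional[str] = None
    next_id: Optional[str] = None


def parse_nodes(doc_id: str, text: str, chunk_size: int = 40) -> list[Node]:
    """Node-parser idea: cut text into chunks and record prev/next relationships."""
    chunks = [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    nodes = [Node(f"{doc_id}-{n}", chunk, doc_id) for n, chunk in enumerate(chunks)]
    for left, right in zip(nodes, nodes[1:]):
        left.next_id = right.node_id
        right.prev_id = left.node_id
    return nodes


def split_sentences(text: str) -> list[str]:
    """Sentence-splitter idea: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
```

The node parser keeps each chunk tied to its document and neighbours, which is what makes context available at retrieval time; the sentence splitter only returns a flat list of sentences.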

Choosing the Right Tool

- For indexing and search: Use Llama Index node parsers to create context-aware representations for your data.

- For language processing tasks: Choose Langchain sentence splitters to accurately break down text into individual sentences.

- For workflows that need both: Consider using the two tools in conjunction. Llama Index provides powerful node management, while Langchain offers language-specific sentence detection.
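Using the two in conjunction can be sketched as: detect sentences first, then pack whole sentences into linked nodes so no chunk cuts a sentence in half. This is a hypothetical illustration in plain Python (`split_sentences` and `sentences_to_nodes` are made-up names), not how either library implements it.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Naive sentence detection: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def sentences_to_nodes(text: str, max_chars: int = 60) -> list[dict]:
    """Pack whole sentences into chunks of at most max_chars (a single longer
    sentence gets its own chunk), then link each chunk to its neighbours."""
    chunks: list[str] = []
    current = ""
    for sentence in split_sentences(text):
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            chunks.append(current)  # close the current chunk before it overflows
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    nodes = [{"id": i, "text": c, "prev": None, "next": None} for i, c in enumerate(chunks)]
    for i in range(len(nodes) - 1):
        nodes[i]["next"] = i + 1
        nodes[i + 1]["prev"] = i
    return nodes
```

With `max_chars=30`, the text `"One two three. Four five six. Seven eight nine."` becomes two linked nodes: `"One two three. Four five six."` and `"Seven eight nine."`.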

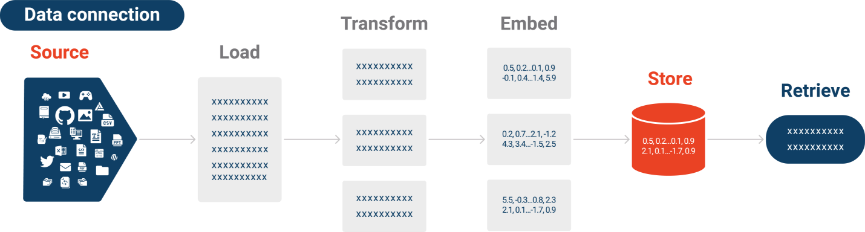

Embedding

The embedding functionality and focus differ between Llama Index and Langchain.

Llama Index focuses on indexing and retrieval: it creates a searchable index of your documents through embeddings. This index is built with a separate embedding model, such as text-embedding-ada-002, that is distinct from the LLM itself.

Langchain, by contrast, focuses on memory management and context persistence, maintaining contextual continuity in LLM interactions, especially over long conversations. It uses embeddings internally, but the process is integrated with the LLM.
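Context persistence can be sketched with a sliding window over recent turns. This is a simplified, hypothetical stand-in, assuming the goal is simply to carry the last `k` exchanges into the next prompt: `WindowMemory` is not a Langchain class, and it stores plain text rather than embeddings.

```python
from collections import deque


class WindowMemory:
    """Hypothetical memory: keep the last `k` exchanges and render them as context."""

    def __init__(self, k: int = 2) -> None:
        # A bounded deque silently drops the oldest exchange once it is full.
        self.turns: deque[tuple[str, str]] = deque(maxlen=k)

    def save(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def as_context(self) -> str:
        """Format the remembered exchanges for inclusion in the next prompt."""
        return "\n".join(f"User: {u}\nAI: {a}" for u, a in self.turns)


memory = WindowMemory(k=2)
memory.save("hi", "hello")
memory.save("q1", "a1")
memory.save("q2", "a2")
print(memory.as_context())  # only the last two exchanges survive
```

A fixed window keeps prompt size predictable at the cost of forgetting older turns; retrieval-based memory, discussed later in this article, trades that simplicity for the ability to recall relevant turns from further back.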

Cost – The Key Difference

The major difference we found between Langchain and Llama Index is cost!

We measured embedding cost in both Langchain and Llama Index using OpenAI embeddings. Embedding 10 document chunks cost $0.01 with Langchain, whereas with Llama Index embedding a single chunk cost $0.01. In our test, then, Langchain was roughly ten times more cost-effective per chunk than Llama Index.
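The per-chunk arithmetic behind that comparison, plus a token-based estimator, can be sketched as follows. The function names are hypothetical, and `usd_per_1k_tokens` is a placeholder for whatever your provider currently charges; no real price is assumed.

```python
def cost_per_chunk(total_cost_usd: float, chunks: int) -> float:
    """Average cost of embedding one chunk in a measured run."""
    return total_cost_usd / chunks


def estimate_cost(tokens: int, usd_per_1k_tokens: float) -> float:
    """Embedding APIs bill per token, so estimate cost from total tokens sent."""
    return tokens / 1000 * usd_per_1k_tokens


# Figures reported in the experiment above.
langchain_per_chunk = cost_per_chunk(0.01, 10)   # about 0.001 USD per chunk
llama_index_per_chunk = cost_per_chunk(0.01, 1)  # 0.01 USD per chunk
ratio = llama_index_per_chunk / langchain_per_chunk

print(f"Langchain:   ${langchain_per_chunk:.4f} per chunk")
print(f"Llama Index: ${llama_index_per_chunk:.4f} per chunk")
print(f"Llama Index cost about {ratio:.0f}x more per chunk in this test")
```

Because embedding APIs bill by token rather than by chunk, a tenfold per-chunk gap with the same embedding model likely reflects differences in how much text was sent (chunk size, metadata, or extra requests), so comparing total tokens with `estimate_cost` is a useful cross-check.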

Another difference is that Llama Index can build an embedding index, so retrieving the relevant embeddings when a query arrives is easier in Llama Index than in Langchain.
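The idea of an embedding index can be sketched as: embed every chunk once when the index is built, then rank chunks by similarity to the embedded query. This is a toy, assuming a bag-of-words `Counter` in place of a real embedding model such as text-embedding-ada-002; `embed`, `cosine`, and `EmbeddingIndex` are hypothetical names, not Llama Index classes.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class EmbeddingIndex:
    """Embed every chunk once at build time, then answer queries by similarity."""

    def __init__(self, chunks: list[str]) -> None:
        self.entries = [(chunk, embed(chunk)) for chunk in chunks]

    def query(self, question: str, top_k: int = 1) -> list[str]:
        q = embed(question)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:top_k]]


index = EmbeddingIndex([
    "llama index builds a vector index",
    "langchain chains prompts and tools",
    "cats sleep all day",
])
print(index.query("how do chains of prompts work"))
```

Paying the embedding cost once at build time is what makes later queries cheap: each query only needs one new embedding plus similarity comparisons against stored vectors.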

The Better Choice?

It all boils down to your requirements and use case.

But given that Langchain's embedding process is slightly better than Llama Index's, as well as more economical and easier to use, we'd say Langchain edges out Llama Index.

That said, if retrieval from the embeddings is central to your application, we recommend going with Llama Index.

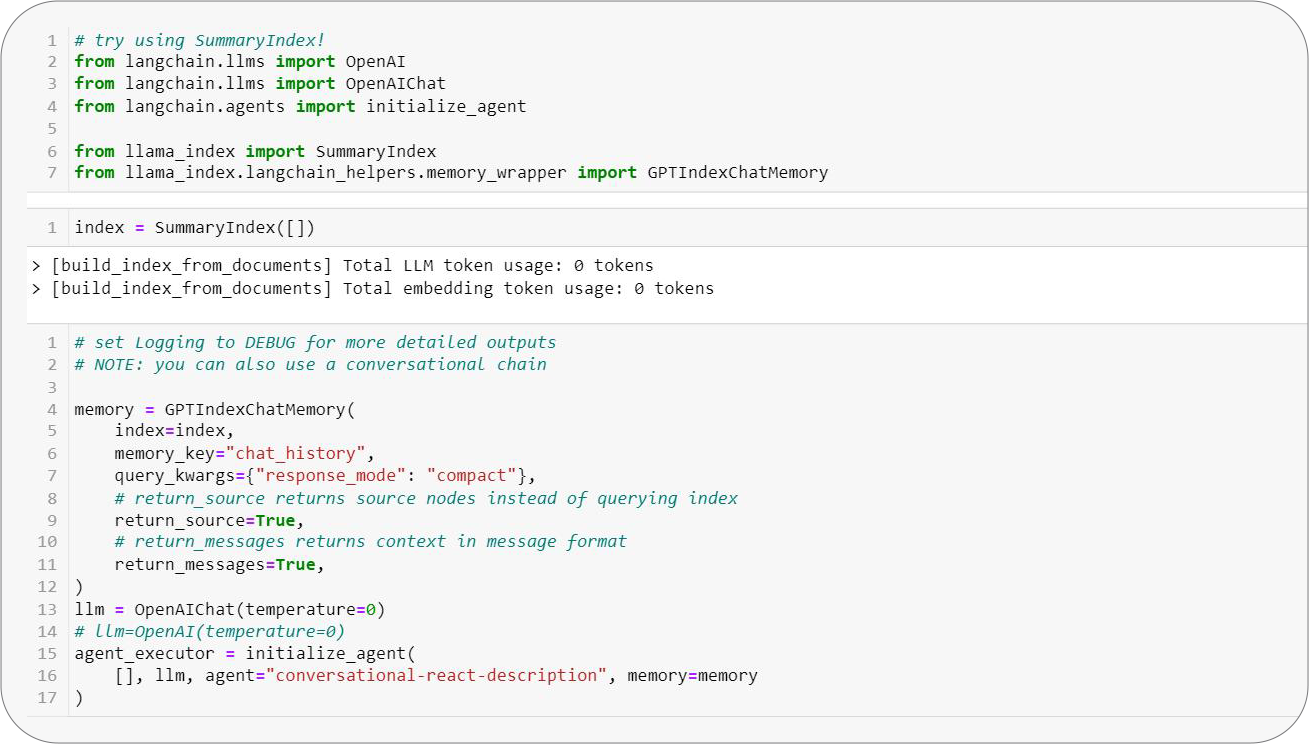

But, Why Choose?

Langchain and Llama Index are NOT mutually exclusive, so why choose? You can combine both tools to achieve your desired outcome.
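A toy sketch of the combination, under heavy assumptions: `ChatHistoryIndex` plays the retrieval role this article assigns to Llama Index, `chat_chain` plays the chaining role it assigns to Langchain, shared-word counting stands in for embedding similarity, and `fake_llm` is a stub so the example runs offline. None of these names come from either library.

```python
def score(query: str, text: str) -> int:
    """Stand-in relevance score: number of shared lowercase words."""
    return len(set(query.lower().split()) & set(text.lower().split()))


class ChatHistoryIndex:
    """Retrieval side: store past turns and return the most relevant ones."""

    def __init__(self) -> None:
        self.turns: list[str] = []

    def add(self, turn: str) -> None:
        self.turns.append(turn)

    def retrieve(self, query: str, top_k: int = 1) -> list[str]:
        ranked = sorted(self.turns, key=lambda t: score(query, t), reverse=True)
        return [t for t in ranked[:top_k] if score(query, t) > 0]


def fake_llm(prompt: str) -> str:
    """Stub model: reports how many remembered turns appeared in the prompt."""
    remembered = sum(1 for line in prompt.splitlines() if line.startswith("- "))
    return f"answer using {remembered} remembered turn(s)"


def chat_chain(message: str, memory: ChatHistoryIndex) -> str:
    """Chaining side: retrieve -> build prompt -> call model -> store the exchange."""
    context = "\n".join(f"- {t}" for t in memory.retrieve(message))
    prompt = f"Relevant history:\n{context}\nUser: {message}"
    reply = fake_llm(prompt)
    memory.add(f"User: {message}")
    memory.add(f"AI: {reply}")
    return reply


memory = ChatHistoryIndex()
memory.add("User: my name is Ada")
memory.add("User: I like hiking")
print(chat_chain("what is my name", memory))
```

Each stage of the chain is a plain function call, so swapping the word-overlap scorer for a real embedding index, or the stub for a real model, would leave the overall flow unchanged.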

Langchain for Chaining and Agents

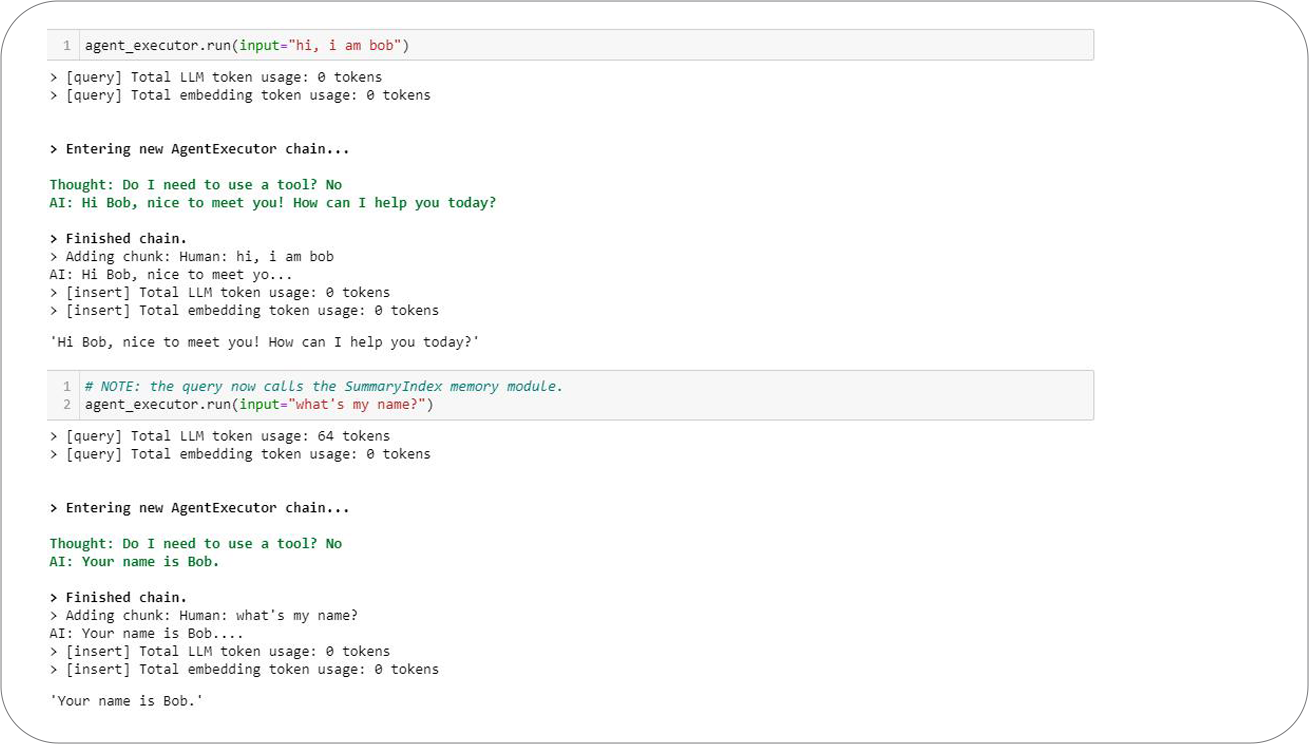

Using Llama Index as a Memory Module

With Llama Index serving as a memory module, a chat session with a Langchain bot can draw on an extensive back-and-forth interaction history. Previous conversations remain readily accessible, which enables more personalized and contextually rich interactions.

In closing, both Langchain and Llama Index offer powerful capabilities for integrating LLMs into custom applications. Langchain provides greater flexibility for chained logic and creative generation, while Llama Index streamlines search and retrieval through efficient indexing. Ultimately, the needs of your specific use case should guide your choice of framework, and in many cases using the two tools together unlocks the full potential of LLMs in your solution. We encourage you to consult this guide as you architect the next generation of AI-powered software.
