RAG-Powered Named Entity Recognition (NER)

USEReady’s Guide to Custom Model Building

Named Entity Recognition (NER) is an important component of Natural Language Processing (NLP). It recognizes and categorizes predefined entities such as names, dates, and locations in a given text. However, developing a NER solution that is efficient and effective, handles complicated input, and generalizes well across domains is a challenging task. A custom NER model can nevertheless be an extremely useful tool for information extraction applications. Possible ways of building one include training a traditional machine learning NER model and fine-tuning a large language model (LLM).

Real-world Applications

NER is frequently used to extract important information from unstructured text in a variety of industry sectors. In healthcare, for instance, it helps extract patient details from clinical notes, enabling better patient management. In finance, NER supports market analysis by extracting significant financial events from news items. Customer care systems use NER to process and route consumer inquiries effectively. These use cases show how crucial reliable NER systems can be in practical situations.

Traditional and LLM-based NER Model Challenges

Traditional ML-based and LLM-based NER models each come with their own challenges.

RAG to the Rescue!

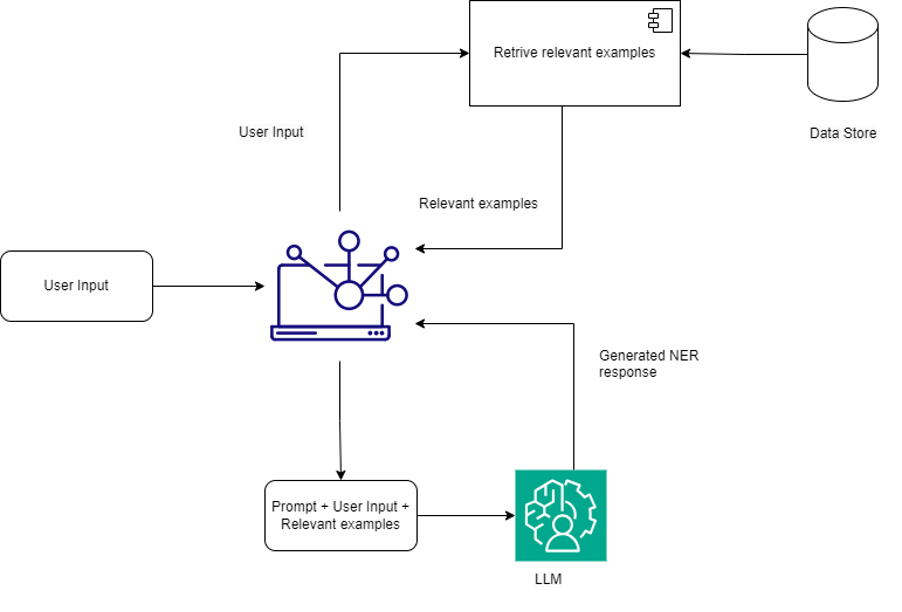

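A potential solution to these issues is a Retrieval-Augmented Generation (RAG) based approach. RAG models combine the strengths of retrieval and generation techniques: relevant annotated examples are retrieved for each input and passed to a generative model as context.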
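The retrieval half of this idea can be sketched in a few lines. The example below is a minimal illustration, not a production retriever: it assumes a tiny in-memory list of annotated examples (`EXAMPLES`, invented here) and uses a simple bag-of-words cosine similarity as a stand-in for the embedding search a vector database would perform. The helper names `bag_of_words`, `cosine`, and `retrieve` are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical annotated examples; a real system would store many more,
# typically as embeddings in a vector database.
EXAMPLES = [
    {"text": "Acme Corp signed the lease on 3 March 2021.",
     "entities": [("Acme Corp", "ORG"), ("3 March 2021", "DATE")]},
    {"text": "Dr. Lee admitted the patient to Mercy Hospital.",
     "entities": [("Dr. Lee", "PERSON"), ("Mercy Hospital", "ORG")]},
    {"text": "The bank raised interest rates in London.",
     "entities": [("London", "LOC")]},
]

def bag_of_words(text):
    """Lowercase the text and count its alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(query, examples, k=2):
    """Return the k annotated examples most similar to the query text."""
    q = bag_of_words(query)
    ranked = sorted(examples,
                    key=lambda ex: cosine(q, bag_of_words(ex["text"])),
                    reverse=True)
    return ranked[:k]

top = retrieve("Globex Corp signed the lease on 1 May 2020.", EXAMPLES, k=1)
print(top[0]["text"])
```

Swapping the similarity function for dense embeddings changes the quality of the matches but not the overall shape of the step: score every stored example against the input and keep the best few.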

Practical Implementation

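Implementing a RAG-based NER system involves a few key steps: curating a small set of high-quality annotated examples, setting up a retrieval system over those examples, and combining the retrieved examples with the user’s input as context for a generative model.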
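As a rough sketch of the generation side, the snippet below assumes the retrieval step has already returned a few annotated examples and shows one way to fold them into a few-shot prompt. Everything here is illustrative: the prompt wording, the JSON output format, and the function names `build_prompt` and `extract_entities` are assumptions, and `fake_generate` is a stub standing in for a real LLM call so that the example runs offline.

```python
import json

def build_prompt(user_text, retrieved_examples):
    """Assemble a few-shot prompt from retrieved annotated examples."""
    lines = ['Extract the named entities from the input as a JSON list '
             'of objects with "text" and "label" keys.', ""]
    for ex in retrieved_examples:
        lines.append(f"Input: {ex['text']}")
        lines.append(f"Output: {json.dumps(ex['entities'])}")
        lines.append("")
    lines.append(f"Input: {user_text}")
    lines.append("Output:")
    return "\n".join(lines)

def extract_entities(user_text, retrieved_examples, generate):
    """Run the prompt through `generate` and parse the JSON it returns."""
    raw = generate(build_prompt(user_text, retrieved_examples))
    entities = json.loads(raw)
    # Optional sanity check (an assumption, not part of any standard):
    # keep only entities whose text literally occurs in the input.
    return [e for e in entities if e["text"] in user_text]

def fake_generate(prompt):
    """Stub for an LLM call; returns a fixed answer for demonstration."""
    return json.dumps([
        {"text": "Globex Corp", "label": "ORG"},
        {"text": "Paris", "label": "LOC"},  # not in the input, so filtered out
    ])

examples = [{
    "text": "Acme Corp leased the office in Boston.",
    "entities": [{"text": "Acme Corp", "label": "ORG"},
                 {"text": "Boston", "label": "LOC"}],
}]
prompt = build_prompt("Globex Corp leased a warehouse.", examples)
result = extract_entities("Globex Corp leased a warehouse.", examples, fake_generate)
print(result)
```

Passing the generator in as a function keeps the prompt logic independent of any particular model provider, so the stub can later be replaced by a real client without touching the rest of the code.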

A Scenario

Let’s consider a hypothetical case in which a legal firm implements a RAG-based NER system to identify and classify legal entities in contracts. First, the firm creates a small set of high-quality annotated examples covering a range of legal terms and contexts. It then sets up a retrieval system using Pinecone to fetch relevant examples based on the input text. By integrating these examples with the user’s input and using GPT-4 for generation, the firm observes significant improvements in the accuracy and flexibility of its NER system, while reducing the need for extensive retraining.

Some Drawbacks

While RAG-based NER offers many advantages, it’s important to take some potential drawbacks into account.

Conclusion

In essence, a RAG-based NER system allows you to create custom NER models with just a handful of high-quality samples. The model then leverages its retrieval capabilities to find relevant supporting examples based on the user’s specific inputs. This significantly reduces the time, cost, and resources required to build effective NER systems.

By combining human expertise in crafting relevant examples with the power of retrieval and generation, RAG offers a promising approach for building custom NER models that are both efficient and adaptable.
