Unleashing the Power of Data Vault 2.0: Revolutionizing Data Warehouse Modeling

Data Vault 2.0: A Comprehensive Guide to Modern Data Warehouse Architecture

Building Future-Ready Data Architectures with Data Vault 2.0

We are living in a world where technologies are driving the process of the business. AI, ML, Big Data etc. are proving to be catalyst in this revolutionizing journey. Businesses have also realized that digitization is the way forward and are not hesitant to experiment with new technologies and new processes.

The world is changing rapidly, and so is the process and technology. This has shaken data management teams at the core. The approach adopted since last five decades are not helping to hold, manage and process data to support this dynamically changing environment.

Lot of effort is going towards maintaining the historical data and merge new data sets coming in from rapidly changing environment adopted to support business.

Understanding the Core Principles of Data Vault 2.0

Benefits of Adopting Data Vault 2.0 for Your Data Warehouse

Implementing Data Vault 2.0: Best Practices and Considerations

Overcoming Challenges in Data Warehouse Modeling with Data Vault 2.0

Is there a more efficient way to store, manage and process data for business use?

Yes, Data Architects are trying to keep up the pace with the demands of data storage and processing. The approach that we are going to talk about is Data Vault.

Data Vault is a data modelling method based on mathematical principles devised by Dan Linstedt to solve the current challenges with 3-NF and de-normalised modelling for EDW.

The modelling approach is efficient enough to accommodate data generated by changes in process and technology/platform.

Let us see how the Data Vault modelling technique maps to Simon Sinek’s famous golden circle.

WHY: Enhanced approach to tackle needs of future data warehouse demands and fix challenges in the current setup

Introduction to Data Vault 2.0: Evolution of Data Warehouse Modeling

Key Components and Concepts of Data Vault 2.0 Architecture

Data Vault 2.0 vs. Traditional Data Modeling Approaches: A Comparative Analysis

Real-World Examples of Successful Data Vault 2.0 Implementations

The business is changing, source systems are changing and adding, and advancement of Big data technologies wants to pump data into the data warehouses (DW). The current DW modelling methods are not robust enough to support that. Any new source changes/introduction is having tsunami impact on the current DW infrastructure for storage and process.

3-NF approach have following challenges with tides are high; time depended primary key leading to parent-child complexities, difficulties in near real time data loading, complex query formation for analytical needs, challenges in scalability. All these challenges are because the approach of 3-NF is technology focused approach. On the other hand, with denormalized conformed data mart, we face difficulties with subject oriented, data redundancy, synchronization issues.

One of the major challenges, we face with granularity issues as conformed data mart inherits no robustness to adopt to changes in the granularity requirements.

In a nutshell, the 3-NF approach is technology platform focused while conformed data mart is business focused.

HOW: Devise data modelling approach to resolve issues with traditional approach (3-NF and Denormalized)

The approach was devised by Dan Linstedt that takes best of 2 world and club it one. The focus was not solving the concerns of traditional method; instead, a positive approach to take best of both world and combine it. This benefit both technology and business teams of an organization.

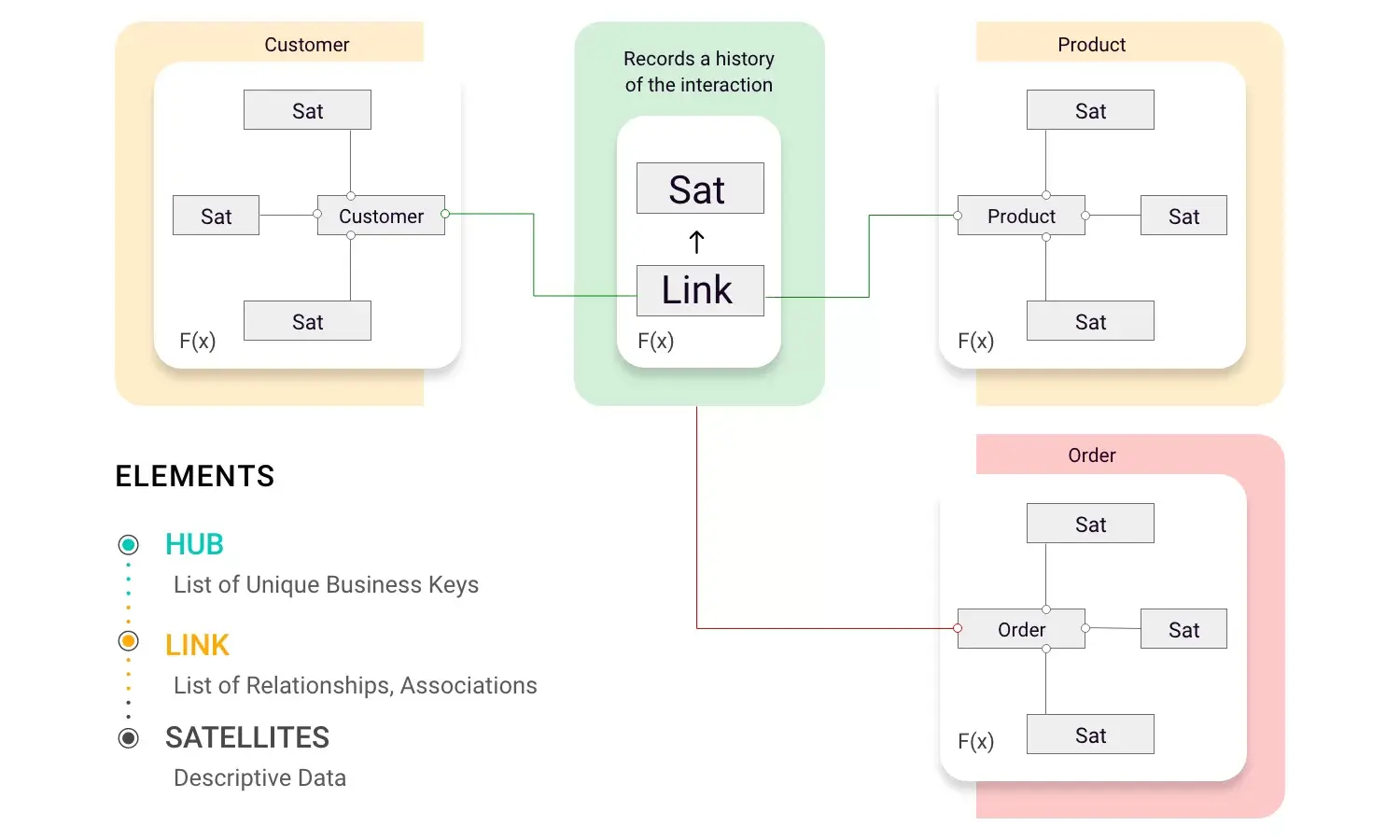

WHAT: What are the components of Data Vault?

There are 3 foundational entities to Data Vault namely HUB, LINKS and SATELLITE. The approach is devised focused on functional areas of business with HUB representing the primary key. LINK entities provide the transactions integration between the HUBS while SATELLITE entities support with the contextual information around the HUBS.

HUBS: Hub entities are single table carrying at minimum a unique list of business keys. These are the keys that the businesses utilize in everyday operations.

For example, invoice number, employee number, customer number, part number etc. This makes sure the approach must be business function focused. The other attribute in HUB includes:

- Surrogate Key (Optional).

- Load Date Time Stamp: to record the key landing date time in the warehouse.

- Record source: To track the source system from which the key was loaded to help data traceability.

For example, the requirement is to capture customer number across the company. Accounting may have a customer number (12345) represented in a numeric style and contracts may have the same customer number prefixed with an alpha (AC12345).

LINKS: Link entities is the representation of many to many relationships (as in 3-NF). The links represents the transaction of relationship between the business components. The LINKS contains the following attributes:

- Surrogate key (optional)

- HUB 1 key to HUB N key: The keys to migrated into the LINKS that represents the relationship between the HUBS.

- Load date time stamp: recording the relation/transaction when it was created for the first time.

- Record Source: The source system that defined the relation/transaction.

SATELLITE ENTITIES: Satellite entities are the entities that gives descriptive context to the HUBS. All the information that is subject to change over a time. This makes model robust enough to capture and store the altered values at most granular level. The satellite holds the following attributes,

- Satellite Primary Key: Hub primary key migrated to satellite from hub or a link.

- Satellite Primary Key: Load date time stamp recording when the context available in the warehouse (The new row is recorded SCD 2)

- Satellite Optional Primary Key: Sequence Surrogate Number – utilized for Satellites that have multiple values (such as a billing and home address), or line-item numbers, used to keep the Satellites sub-grouped and in order.

- Record Source: The source system that defined the relation/transaction.

Data Vault’s Conceptual Representation

Here is the conceptual model for reference.

HUBS acting as centre view driving the business-critical entities and LINKS defining how are HUBS related or any transaction conducted between the HUBS.

There is a standard step that has been defined to come up with the conceptual Data Vault model.

The steps are as follows:

- Model the Hubs: This requires an understanding of business keys and their usage across the designated scope.

- Model the Links: Forming the relationships between the keys – formulating an understanding of how the business operates today in context to each business key.

- Model the Satellites: Providing context to each of the business keys as well as the transactions (Links) that connect the Hubs together. This begins to provide the complete picture of the business.

- Model the point-in-time Tables: This is a Satellite derivative.

How USEReady Can Help

USEReady is an award winning, data transformation consultancy that applies best practices and technology to deliver solutions that help our customers succeed with data. We bring deep expertise and skills in data warehouse modelling, moving data systems to cloud, and data vault architecture.

We have partnered with leading data cloud platforms such as Snowflake and have accelerators and expertise in building and implementing Data Vault in line with customers’ reference architecture. Through our holistic approach, expertise in modern data platforms and our Analytics Maturity Framework; we enable customers to accelerate their digital transformation journey.

References

Links used in research and developing: https://www.data-vault.co.uk/what-is-data-vault/